Intelligence Beyond Humans: The Interdisciplinary Perspectives Missing from AI Discourse

Part 2 of a series examining the historical origins and fundamental flaws in how we talk about AI

In my first post in this series, I explored how the term "artificial intelligence" originated not from scientific or philosophical grounding, but from academic positioning and marketing. I traced how John McCarthy coined the term in 1956, deliberately distancing his work from cybernetics and other competing approaches.

But there's an even more fundamental problem underlying our confused AI terminology: What do we actually mean by "intelligence" itself? Even before we add the qualifier "artificial," the concept of intelligence remains contested across scientific disciplines.

The problem deepens when we try to apply human-centric notions of intelligence to fundamentally non-human systems. Our default frameworks, shaped by psychology, cognitive science, and our own experiences as humans, create unrealistic expectations and obscure what AI systems actually do and don't do.

In this post, I'll explore how researchers across disciplines understand intelligence through frameworks that extend beyond both human and computational boundaries. These perspectives rarely enter mainstream AI discussions, yet they offer valuable insights that could reshape how we think about, develop, and evaluate AI systems.

The Problem with Human-Centric Intelligence Frameworks

The earliest scientific theories of intelligence come from Psychology, with definitions that were developed specifically for humans. While psychologists have proposed various theories of intelligence over the past century, they all become problematic when applied to non-human systems.

Historically, AI researchers have also defined artificial varieties of “intelligence” with respect to human intelligence. Even John McCarthy, who coined the term "Artificial Intelligence," defined it in human terms:

"The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves."

More recent definitions of “intelligence” or “artificial intelligence” from the AI field follow in this tradition. IBM defines “Artificial Intelligence” as “[T]echnology that enables computers and machines to simulate human learning, comprehension, problem solving, decision making, creativity and autonomy.”

This human-centric framing creates an immediate problem when applied to AI systems, which differ fundamentally from humans. Humans are embodied, evolved beings with personal identities and social relationships, while today's AI systems lack these qualities. Our working memory is severely limited to about 3-5 meaningful items at a time, but we excel at navigating ambiguity and learning from limited examples. By contrast, AI systems have vast memory capacity but struggle with basic logic and contextual understanding.

These differences explain why we see such inconsistent capabilities from AI models. The same systems that can pass the Bar Exam can fail miserably at basic counting problems and provide fake citations for legal cases.

To get a more comprehensive grasp on intelligence, we need a broad, interdisciplinary approach. This work is already underway at the Santa Fe Institute, where researchers from diverse fields have come together to develop more nuanced perspectives on intelligence.

Intelligence Beyond Human Cognition: Four Interdisciplinary Perspectives

In my previous post, I described how AI as a discipline emerged from Cybernetics, a field that concerned itself with the interdisciplinary study of the structure and behavior of complex systems. This interdisciplinary approach enabled broad concepts (such as feedback loops) to be abstracted from individual domains and substrates. Cyberneticists found that many of the same systems of control that applied to biology could also be applied to robotics, systems engineering, economics, and sociology, enabling cohesive theories that transcend domains.

Following in this tradition, we can study intelligence across domains to develop a more cohesive and comprehensive theory of what exactly we mean when we say “intelligence”, and how that may apply to artificial systems. Fortunately, this initiative is already underway at the Santa Fe Institute, an organization focused on the multidisciplinary study of complex systems. The first workshop report for this project includes broad perspectives on intelligence from leading researchers across domains. This was followed by more in-depth workshops on Embodied, Collective, and Evolutionary perspectives on intelligence. The differing views that emerged in the workshops can be seen as complementary angles rather than competing approaches. Often the same system can reasonably be viewed from multiple perspectives, with each contributing to a more complete picture of the nature of its intelligence. The approaches discussed in these workshops correspond with other efforts to build interdisciplinary frameworks for understanding intelligence.

Collective Intelligence: When the Whole Exceeds Its Parts

From this perspective, intelligence arises when many individual components or agents interact to solve problems adaptively. While it’s common for intelligent systems to be composed of many basic components, systems with collective intelligence are characterized by the autonomous nature of their component parts and the emergence of intelligence as a direct result of autonomous individual behavior at scale. For example, ant colonies and human social networks exhibit forms of collective intelligence that transcend the capabilities of any single member.

Collective intelligence systems balance important tradeoffs:

Coordination through control vs. flexibility through autonomy

Global vs. local information sharing

Complex vs. simple network structures

These tradeoffs shape the emergent intelligence of the system and determine how it adapts to different challenges.
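To make the local versus global information tradeoff a little more concrete, here is a minimal Python sketch. It is a toy illustration of my own, not a model taken from the Santa Fe workshops, and the names `local_consensus`, `estimates`, and `rounds` are hypothetical. Each agent sits on a ring and only ever sees its two immediate neighbors, yet the group as a whole settles on the average of everyone's starting estimates.

```python
def local_consensus(estimates, rounds):
    """Each agent repeatedly averages its estimate with its two ring neighbors."""
    values = list(estimates)
    n = len(values)
    for _ in range(rounds):
        # Synchronous update: every agent uses only local information.
        values = [
            (values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3
            for i in range(n)
        ]
    return values


# Eight agents start with different private estimates (illustrative numbers).
initial = [2.0, 9.0, 4.0, 7.0, 1.0, 8.0, 3.0, 6.0]
final = local_consensus(initial, rounds=100)
global_mean = sum(initial) / len(initial)
print(global_mean, min(final), max(final))
```

No single agent ever computes the global average, but after enough rounds every agent holds a value very close to it. Changing the network structure (who counts as a neighbor) would change how quickly the group converges, which is one simple way to see the tradeoffs listed above.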

Embodied Intelligence: Why Bodies Matter for Thinking

Embodied Intelligence frames cognition as the interplay between brain, body, and environment rather than abstract computation. This view holds that an agent's physical form shapes its learning and cognitive capabilities, with bodily constraints and sensorimotor experiences serving not as limitations but as essential components that ground concepts, simplify problems, and enable intelligent behavior. Embodied intelligence concerns itself with three main concepts:

Embodiment: How physical bodies shape cognitive processes

Grounding: How concepts acquire meaning through connection to the physical world

Situatedness: How ongoing interactions with environments shape cognition

Children learn through interactions with their environment in a process called self-generated learning. Some experts believe this embodied approach is essential for learning key concepts like causation, while others view it as just one possible approach to learning.

Evolutionary Intelligence: Nature's Approach to Problem-Solving

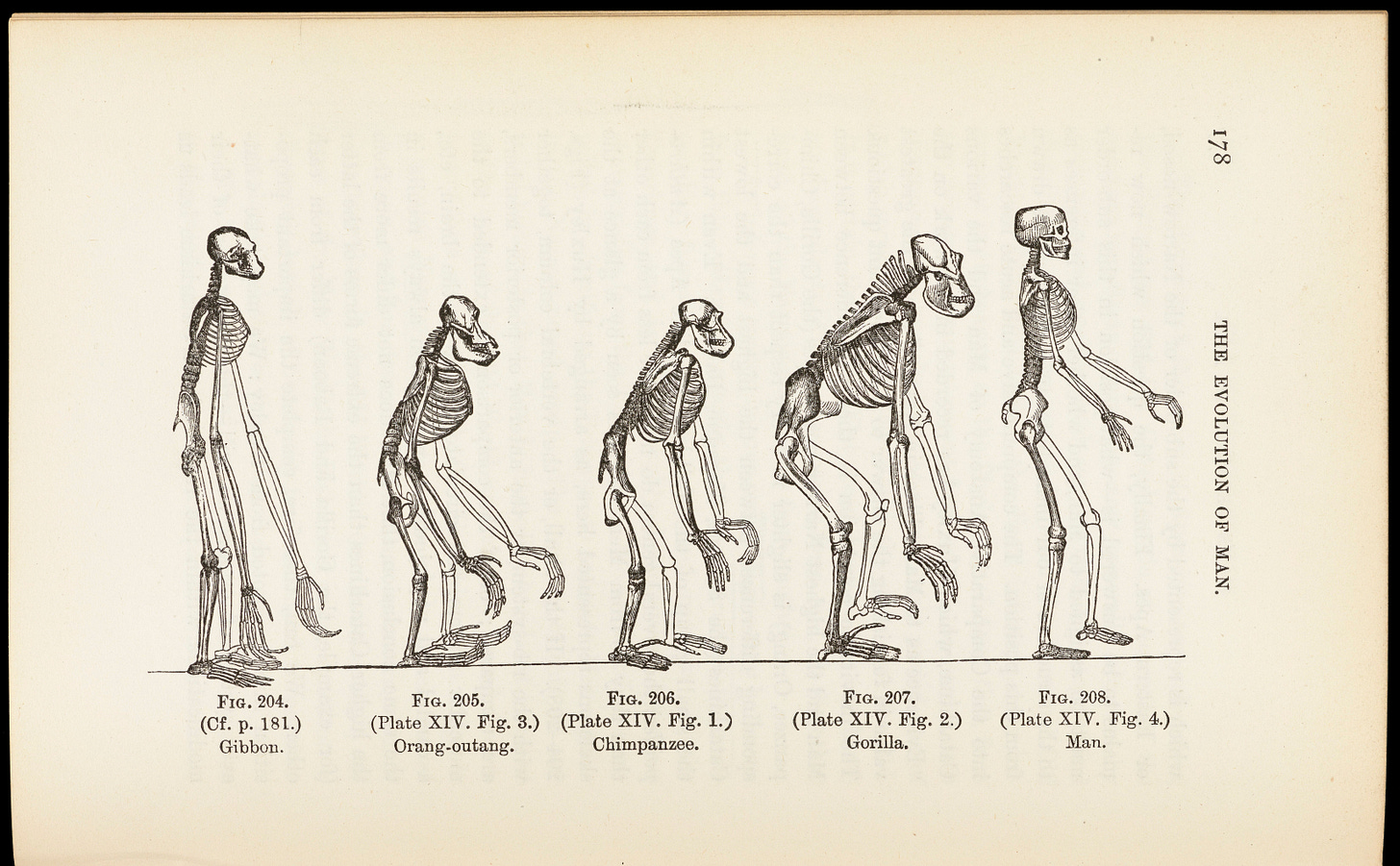

From the evolutionary perspective, we can understand intelligence as a characteristic that emerges through natural selection processes, where cognitive abilities and problem-solving strategies develop over generations in response to environmental challenges. Evolution provides the only proven method for creating general intelligence like that found in humans and animals. Several characteristics of biological evolution enable the development of intelligent organisms:

Changing evolutionary pressures drive robust, generalizable solutions that work across varied conditions

Natural feedback loops between agents and environments drive ongoing novelty and diversity

Constraints narrow the search space of possible characteristics, directing evolution toward effective solutions

Open-endedness enables unlimited diversity and complexity over time
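The characteristics above can be loosely sketched in code. What follows is a toy evolutionary loop in Python, offered as an illustration under my own assumptions rather than as a model of biological evolution: the function `evolve`, the numeric "genomes", the shifting target, and the clamp to the range 0 to 10 are all hypothetical choices. The moving target stands in for changing pressures, and the clamp stands in for a constraint on the search space.

```python
import random


def evolve(target_for_generation, generations, pop_size=20, seed=0):
    """Toy evolutionary loop: keep the fitter half, then add mutated copies of it."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    population = [rng.uniform(0.0, 10.0) for _ in range(pop_size)]
    history = []
    for gen in range(generations):
        target = target_for_generation(gen)  # the environment can change over time
        # Individuals closer to the current target are treated as fitter.
        ranked = sorted(population, key=lambda x: abs(x - target))
        survivors = ranked[: pop_size // 2]
        # Offspring are mutated copies, clamped to [0, 10] as a simple constraint.
        offspring = [
            min(10.0, max(0.0, parent + rng.gauss(0.0, 0.5)))
            for parent in survivors
        ]
        population = survivors + offspring
        history.append(abs(ranked[0] - target))  # best error this generation
    return population, history


# Hypothetical shifting pressure: the target moves from 3.0 to 7.0 halfway through.
population, history = evolve(lambda gen: 3.0 if gen < 30 else 7.0, generations=60)
print(history[0], history[29], history[30], history[59])
```

Because the fitter half always survives, the best error never gets worse while the target stays put. When the target shifts, the population has to adapt again. This sketch leaves out open-endedness entirely, which is arguably the hardest of the four characteristics to reproduce in software.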

The evolutionary perspective on intelligence deals with changes to populations over time. A closely related concept, Ecological Rationality, operates at the scale of the individual and its immediate environment. This approach views intelligence as the degree to which an agent’s toolbox of cognitive strategies and heuristics is adapted to its environment.

Model-Based Intelligence: The Conventional Approach

From this perspective, intelligence is characterized by the ability to build and manipulate internal models to generate predictions. By simulating models of oneself and the environment, agents can reason about hypotheticals and plan actions without direct experience. Key areas include perception models, simulation capabilities, prediction of future states, and abstraction/analogy formation. This approach is the most common understanding of intelligence in modern Cognitive Science, Neuroscience, and AI.
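A small Python sketch may help show what "planning without direct experience" means in practice. This is a deliberately simple illustration of my own, not a description of how any real AI system works: the one-dimensional corridor, the `world_model` function, and the `plan` function are all hypothetical. The agent never actually moves. It only imagines the outcome of each candidate action sequence using its internal model and picks the one that ends closest to the goal.

```python
from itertools import product


def world_model(position, action):
    """Internal model of a 1-D corridor with cells 0..9: predicts the next position."""
    return min(9, max(0, position + action))


def plan(start, goal, horizon=3, actions=(-1, 0, 1)):
    """Pick the action sequence whose imagined outcome ends closest to the goal."""
    best_sequence, best_distance = None, None
    for sequence in product(actions, repeat=horizon):
        imagined = start
        for action in sequence:
            imagined = world_model(imagined, action)  # simulate, don't act
        distance = abs(goal - imagined)
        if best_distance is None or distance < best_distance:
            best_sequence, best_distance = sequence, distance
    return best_sequence


print(plan(start=2, goal=4))
```

The quality of the plan depends entirely on the quality of the model. If `world_model` mispredicted the corridor, the agent would confidently choose the wrong actions, which is one reason this perspective puts so much weight on prediction and abstraction.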

Common Threads: Recurring Themes Across Perspectives

Across all of these perspectives, several recurring themes highlight important questions and considerations that repeatedly arise in the study of intelligence:

Metacognition: The capacity to monitor, evaluate, and regulate one's own cognitive processes. This "thinking about thinking" enables intelligent systems to select appropriate strategies for a given situation and recognize knowledge limits.

Constraints: Across disciplines, researchers find that constraints don't merely limit intelligence but actively shape it in beneficial ways. Limitations in resources, communication, and physical embodiment can promote more efficient, robust solutions.

Multi-Scale Learning: Intelligence emerges from learning processes that operate at different, complementary timescales, from evolutionary adaptation to real-time processing. The interplay between these levels may be essential for robust intelligence.

While these perspectives converge on several themes, the biggest questions around intelligence remain contested. Experts disagree about whether or not there is a meaningful distinction between narrow competence and general intelligence, a debate with direct implications for discussions about Artificial General Intelligence in AI. There is also uncertainty regarding the role of consciousness and comprehension, the necessity of embodiment for learning, and the importance of agency and purpose.

Current AI Through a Broader Lens: What's Missing?

These interdisciplinary perspectives reveal how narrow our current approaches to AI have become, constrained by path dependence on historical approaches to AI and computation as a whole. When we examine today's AI systems through these broader lenses, we can identify both what they incorporate and what crucial elements they lack:

What Current AI Systems Include:

Current AI systems primarily operate from the model-based perspective, focusing on pattern recognition and internal representations. They also exhibit some degree of multi-scale learning, from pre-training on massive datasets to in-context adaptation within conversations. Multi-agent AI systems attempt to incorporate elements of collective intelligence and embodied intelligence, though typically in limited ways.

What's Missing:

Embodiment and Situatedness

While debate continues about whether physical embodiment is necessary for general intelligence, evidence suggests that learning through interaction with environments provides richer data and more efficient learning. Today's AI systems largely lack this grounding, which may explain their struggles with causality and contextual understanding.

Beneficial Constraints

Unlike natural intelligence, which thrives on constraints, most AI development aims to remove limitations. Yet constraints often drive innovation and efficiency. A telling example comes from DeepSeek AI, which in 2024 faced severe hardware restrictions due to U.S. export controls. These constraints pushed the company to develop an unorthodox approach, resulting in their R1 model, which reportedly matched the performance of leading models while using significantly fewer computing resources.

Evolutionary Robustness

Natural intelligence emerges through evolution's ability to produce resilient solutions through changing pressures and feedback loops. Current AI systems rarely incorporate these evolutionary principles, potentially limiting their adaptability and generalization capabilities.

Integration Across Multiple Approaches

Intelligence can take multiple forms and different definitions are appropriate in different contexts. There is broad agreement that no single theoretical framework can fully explain intelligence, and researchers increasingly recognize the need for the integration of multiple perspectives. While integration across approaches remains challenging, some current AI systems do attempt to bridge multiple perspectives:

Multi-agent systems combine model-based approaches with elements of collective intelligence, allowing individual AI agents to interact and solve problems collaboratively. For example, systems like AutoGen create networks of specialized AI agents that communicate to accomplish complex tasks.

Reinforcement learning combines model-based approaches with ecological perspectives, as systems learn to adapt to their environments through feedback. Reinforcement learning from human feedback (RLHF) further extends this by incorporating social dynamics through human evaluations.

Robotics research represents perhaps the most integrated approach, combining embodied intelligence with model-based reasoning and adaptive learning. Projects like Boston Dynamics' robots demonstrate how physical embodiment creates unique challenges and opportunities for AI systems.

Despite these promising efforts, truly comprehensive integration remains an area for growth. Future AI might benefit from more systematically combining model-based reasoning with evolutionary algorithms, embodied learning, and sophisticated collective intelligence principles, creating systems that can leverage multiple complementary approaches to problem-solving. This integration could produce AI with greater robustness, adaptability, and generalization capabilities beyond what we see today.

Final Thoughts

Looking at intelligence through these interdisciplinary lenses offers more than theoretical insights – it provides practical direction for AI development. While today's systems have achieved impressive results in narrow domains, they've developed along a constrained path that lacks many qualities that make natural intelligence so robust and adaptable.

By expanding our understanding beyond computational models to include embodied, evolutionary, and collective perspectives, we can identify specific opportunities for innovation. The inconsistent capabilities of large language models, which are impressive at some tasks while failing at seemingly simple ones, become more understandable when we recognize what's missing from their development framework.

Recognizing that intelligence can take multiple forms, with different definitions appropriate in different contexts, gives us a more nuanced foundation for characterizing, developing, and evaluating AI models. Rather than fixating on the comparison of AI systems to human-like general intelligence, we might build a more accurate and useful understanding of the intelligence we find in AI systems by taking a broader interdisciplinary perspective. By expanding our understanding of intelligence and applying a wider range of approaches in AI, we might explore the unique forms of intelligence that artificial systems could manifest through different arrangements of capabilities and constraints.

What’s Next?

In my next post, I'll examine how our confused AI terminology has evolved through successive waves of hype and disillusionment. I'll trace the historical rebrands of AI, from symbolic AI to expert systems to machine learning to deep learning to today's generative AI, investigating how marketing frameworks further mystify an already complex field.

I'll dissect the buzzwords that dominate today's AI landscape, showing how terms like "artificial general intelligence," "AI agents," and "agentic AI" create confusion about what these systems actually do and don't do. By understanding the historical pattern of AI terminology shifts, we can better navigate the current landscape of claims and capabilities.

The fourth and final post in this series will then propose a more pragmatic framework for understanding AI systems based on their actual capabilities rather than marketing terminology, providing practical guidance for evaluating AI claims with genuine skepticism.

Further Reading

The Santa Fe Institute’s Foundations of Intelligence workshops: These four workshops offer a fascinating overview of a wide range of perspectives on Intelligence.

Towards an interdisciplinary framework about intelligence: This paper offers a more structured and cohesive interdisciplinary framework for intelligence.

Paul S. Rosenbloom’s work on Cognitive Architectures and Models: Dr. Rosenbloom has spent his career researching cognitive architectures from the perspectives of both AI and Cognitive Science. He’s written dozens of relevant and insightful papers in this area. Though not a peer-reviewed paper, I found his published draft, Defining and Exploring the Intelligence Space, to be a valuable and easy-to-understand resource for making sense of the many approaches and concepts in the Intelligence Space.

Evolution of Brains and Computers: The Roads Not Taken: This paper provides a more in-depth overview of evolutionary perspectives on intelligence and how they relate to AI systems.