What is AI? The Origins of a Confused Terminology

Part 1 of a series examining the historical origins and fundamental flaws in how we talk about AI

The Origins of "Artificial Intelligence"

In my last post, I discussed approaching AI claims with skepticism. But there's an even more fundamental problem we need to address: What are we even talking about when we say "artificial intelligence"?

In recent years, tech news outlets, blogs, and social media posts have been brimming with a dizzying array of terms: AI, Machine Learning, Deep Learning, Agentic Systems, Generative AI, and more. These terms are often used interchangeably, inconsistently, or simply incorrectly by nearly everyone, from marketers to researchers and tech executives.

This isn't just semantic nitpicking. When everyone uses the same words to mean different things (or different words to mean the same thing), we end up with real consequences: difficulty evaluating claims, comparing systems, understanding capabilities, and implementing solutions responsibly.

In my view, the common terminology we use to discuss AI systems is fundamentally inadequate, acting more as marketing constructs than as coherent technical concepts with clear definitions. Rather than trying to nail down precise definitions for inherently fuzzy terms, we'd be better served by focusing on what systems actually do: their specific capabilities, limitations, and requirements.

I'd originally planned to cover all aspects of AI terminology in one post, but the depth of this topic deserves a more thorough treatment. So I'm breaking this into a multi-part series, beginning today with the historical origins of AI terminology. This is the story of how John McCarthy chose the term "artificial intelligence," what it originally encompassed, and how early schisms shaped the field for decades to come.

From Cybernetics to Computing

Descartes considered the notion of a machine with human-like intelligence in the 1600s, and in the 1840s Ada Lovelace envisioned machines that could perform logical operations on symbols, anticipating modern-day computing.

Beyond this speculation, serious attempts to actually build some form of “thinking machine” began in the 1940s with the emergence of Cybernetics, the study of control and communication in complex biological or mechanical systems. Cybernetics is an inherently interdisciplinary field, with its core focus on defining basic principles of control mechanisms (such as feedback loops) and systems behavior that transcend domains. It is arguably a common ancestor to the modern disciplines of Cognitive Science, Computer Science, Artificial Intelligence, Neuroscience, and other fields that concern themselves with the control of complex systems.

Cybernetics was at its peak before modern computing had matured as an engineering discipline. Electronic digital computers had not yet become the dominant hardware on which all software runs, and there was no clear distinction between hardware and software at all. This is the intellectual environment in which many approaches to AI were first conceptualized. Cyberneticists saw machines, biological systems, brains, and social systems as governed by the same basic principles of communication and control. Many researchers who later went on to work under the umbrella of “artificial intelligence” had their start in Cybernetics.

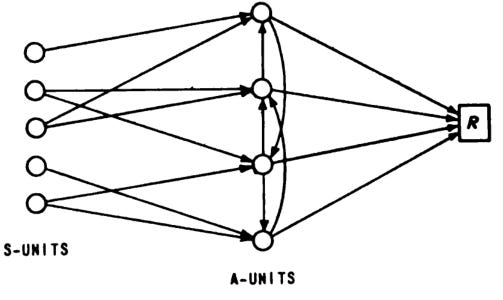

For example, the artificial neuron was developed in the 1940s, leading to Frank Rosenblatt’s Perceptron. This approach attempted to simulate biological brain cells. The Perceptron laid the technical foundations for many AI approaches that are still used to this day, including artificial neural networks and the Large Language Models (LLMs) that power AI chatbots like ChatGPT. Rosenblatt deliberately differentiated his work from “artificial intelligence”, instead calling it “Perceptron Theory”. This is not the only example of professional egos and rivalries shaping terminology. The term “artificial intelligence” itself arose from a similar dispute.

The Birth of the Term

At the core of the story of AI terminology is our central figure, John McCarthy, widely heralded as the “father of AI”. His historic role as the founder of AI is disputed (he was one of many impactful people and institutions in the origin of AI); however, he is undoubtedly the father of AI hype.

McCarthy coined the term “artificial intelligence” in his proposal for the famed Dartmouth Workshop of 1956, in which a group of researchers gathered to explore how machines might simulate aspects of human intelligence. Before his term was widely adopted, a variety of other terms were used in reference to similar concepts. Terms like “Self-organizing Systems”, “Automata Theory”, “Thinking Machines”, “heuristic programming”, “automatic coding”, and others were common at the time.

So why did McCarthy feel a new term was needed to begin with? McCarthy’s early work was focused on automata, automatic mechanical systems that were a popular area of study in cybernetics at the time (think automatic clocks or old-fashioned robots).

After working with mathematician Claude Shannon on the book *Automata Studies*, McCarthy was frustrated by the lack of emphasis on “Intelligent Automata” in the book and dismissed the work of other contributors who were not focused on that area. Soon afterwards, he and his collaborators wrote the proposal for the Dartmouth Workshop, defining the goal of the workshop:

The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

It was a bold proposal, and no, they didn’t make much progress in solving AI over the summer. The proposal describes a few specific areas of interest, giving insight into which topics AI was considered to cover at the time:

Automatic Computers (This essentially refers to compilers, a technology that is now well developed and is widely used in programming)

How can a computer be programmed to use a language

Neuron Nets

Theory of the Size of a Calculation (This is the concept of computational complexity - how many individual computations need to be done to complete a program).

Self Improvement

Abstractions

Randomness and Creativity

Interestingly, these topics cover a bizarre intersection of areas that ended up being relatively easy to accomplish (e.g. automatic computers) and topics that are barely understood conceptually even now (creativity). Here we have McCarthy defining AI not by any considered expert definition of intelligence, but rather by what he himself considered to be the most exciting, cutting-edge work.

His choice of term was deliberate, made to emphasize the work he personally favored. McCarthy later noted that he specifically chose the new term “artificial intelligence” to avoid association with cybernetics. He did not care for the analog approaches in cybernetics (favoring digital computers) and did not want to debate the topic with leading cyberneticist Norbert Wiener.

McCarthy’s area of particular interest was Symbolic AI, an approach to AI that relies on rules, symbolic representations, and logical inference. In theory, the program can think and solve problems by reasoning from basic principles. McCarthy believed that, if done correctly, this approach would enable us to automate broad fields of philosophy, such as Epistemology (the study of knowledge). In the early days of AI, his view was the one that won out, aided in part by the accelerating advancements in digital computing. In this first wave of AI, other approaches, such as neural nets and the analog feedback systems of cybernetics, were discarded. McCarthy’s term, “artificial intelligence”, was not immediately accepted by others in the Symbolic AI space, but McCarthy was persuasive, persistent, and had the support and funding of academic and military institutions behind him. Over time, other areas of research in the space began to rebrand as “AI”.

McCarthy's introduction of "artificial intelligence" may have won the terminology battle, but it created a fundamental problem that persists to this day: What exactly does "intelligence" mean in this context?

Why "Intelligence" Is a Problematic Term for AI

The problem is that the term “intelligence” has never had an agreed-upon definition, even in the context of purely human intelligence. It's a concept that scientists across multiple disciplines continue to grapple with, and there isn't much consensus within a given discipline, let alone across them. From psychology to neuroscience to AI research, each field brings its own conceptualization of what constitutes intelligence.

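From the perspective of Psychology, we can get a seemingly straightforward definition from the American Psychological Association:

“the ability to derive information, learn from experience, adapt to the environment, understand, and correctly utilize thought and reason.”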

But this definition leaves us with more questions than answers when we try to apply it to a non-human system. What does it mean to “correctly utilize thought and reason”? How could a disembodied system adapt to its environment? Is understanding possible without consciousness? These questions remain largely unanswered, and this is only one of many disputed definitions from the field of psychology alone.

This lack of definitional clarity made McCarthy's use of "artificial intelligence" problematic from the start. Without a rigorous understanding of intelligence itself, the goal of creating "artificial intelligence" was inherently nebulous. This isn't merely a semantic quibble - it has direct consequences for how we develop, evaluate, and talk about AI systems today. When everyone uses the same word to mean different things, meaningful communication becomes nearly impossible. In my next post, I'll explore these different conceptions of intelligence in more depth, examining how interdisciplinary approaches might offer a richer understanding of intelligence beyond the narrow human-centric view.

McCarthy's definitional choices weren't the only consequential decisions made in those early days. In fact, the early history of AI was marked by competing visions for how to create these so-called "intelligent" machines, a competition that would shape the field for decades to come.

The Battle for AI's Soul: Early Schisms

The field of AI was born in controversy, a tradition that has continued to this day. Following the early debates over analog versus digital systems, a new, equally fundamental feud developed over symbolic versus sub-symbolic AI. McCarthy's symbolic approach didn't win universal acceptance immediately. In those early years, a philosophical divide emerged between competing visions of how to create "artificial intelligence." At the core of this divide was the gradual split between AI and Cybernetics.

On one side stood the symbolists, led by McCarthy and Marvin Minsky, who believed intelligence emerged from rule-based manipulation of symbols and logic. On the other side were researchers like Frank Rosenblatt exploring neural networks (then called “brain modeling” rather than “artificial intelligence”) who drew inspiration from the brain's structure to create learning systems. This approach formed the origin of sub-symbolic AI, which takes the view that intelligence can emerge from the bottom up, through statistical pattern recognition and probabilistic inference. These competing approaches represented fundamentally different philosophies about the nature of intelligence itself and how it may be simulated.

The split wasn't merely academic. By the mid-1960s, funding and institutional support began flowing predominantly toward symbolic AI. This shift wasn't necessarily because symbolic approaches demonstrated superior performance. Rather, they aligned better with the dominant computational paradigms of the time and produced more immediately tangible results. The Defense Advanced Research Projects Agency (DARPA, known at the time as ARPA), a major funder of early AI research, favored projects with clearly articulated goals and potential military applications. The earlier shift towards digital computing, paired with limited computing resources, also favored symbolic approaches, which required less computational power than neural network models.

This early victory for symbolic AI had lasting consequences. As a result, “artificial intelligence” became the prevailing term, and for a long time “AI” was synonymous with McCarthy’s symbolic AI.

However, the limitations of symbolic AI became increasingly apparent as researchers attempted to tackle problems requiring common sense reasoning or perceptual understanding. The approach excelled at chess and mathematical theorem proving but stumbled on tasks that humans found trivial, like recognizing objects in varied contexts or understanding natural language with all its ambiguities. These limitations would eventually lead to the first "AI winter", a period of reduced funding and interest in the late 1970s. This set the stage for the re-emergence of neural networks and the successive rebrands and revivals that have characterized AI's history ever since.

The Legacy of McCarthy's Terminology

The story of AI terminology begins not with scientific precision, but with academic positioning, funding battles, and the ambitious vision of a handful of researchers who believed in the idea of “thinking machines” built on narrow understandings and unfounded conviction. McCarthy's choice to brand this nascent field as "artificial intelligence" was as much a marketing decision as a scientific one, a pattern that continues to this day in how we talk about AI. By understanding these historical roots, we gain crucial context for evaluating today's AI claims.

The field has always been shaped by the interplay between genuine technical innovation and language that promises more than it can deliver. This terminological confusion isn't merely academic. It affects which approaches receive funding, how technologies are designed and deployed, and the expectations we bring to these systems.

In my next post, I'll explore how intelligence is understood across scientific disciplines, examining fascinating approaches to intelligence that rarely enter mainstream AI discussions. By broadening our conceptual toolkit beyond McCarthy's limited framework, we can develop a more nuanced understanding of what these systems actually are and what they are not.

What’s Next?

In next week's post, I'll explore intelligence beyond the human-centric view, diving into interdisciplinary approaches that rarely enter mainstream AI discussions. Here's what you have to look forward to:

The many ways to define intelligence across scientific disciplines

Research directions on alternative approaches to intelligence

Why embodied, ecological, and evolutionary perspectives matter for AI

Debates over the distinction between competence and intelligence

This deep dive into intelligence research will elucidate why AI systems excel at specific tasks yet struggle with seemingly simple ones, and explore broader ideas about what ‘intelligence’ really is.

In subsequent posts, I'll continue this series with an examination of the historical rebrands of AI and today's most confusing AI buzzwords. The series will conclude with a fourth post proposing a practical framework for describing AI systems based on their actual capabilities rather than marketing terminology. By the end, you'll have a complete toolkit for cutting through AI hype and evaluating claims with genuine skepticism.

Further Reading

I drew from a lot of academic papers in my research for this post. Unfortunately, some of them are paywalled (although I was able to gain free access through my public library). For ease of access, I’ve found some additional (non-paywalled) sources of information to share here.

Inventing Intelligence: On the History of Complex Information Processing and Artificial Intelligence in the United States in the Mid-Twentieth Century: This in-depth dissertation explores the historical and philosophical context around the origin of AI.

Artificial Intelligence: Reframing Thinking Machines Within the History of Media and Communication: Another source offering a nuanced understanding of how the field of AI emerged.

Cybernetics, Automata Studies, and the Dartmouth Conference on Artificial Intelligence (Paywalled): A frequently cited historical analysis of the separation of the field of AI from its predecessors and the surrounding events.

Cybernetics: from Past to Future: A deep dive into the history of Cybernetics.

Surfing the AI waves: the historical evolution of artificial intelligence in management and organizational studies and practices: The history of the multiple waves of AI hype and disillusionment.